Concurrent programming is a paradigm in which a program is structured as multiple tasks or threads whose execution can overlap in time. Its goals are to use a computer's resources more efficiently and to improve program performance and responsiveness.

Features

Rust is a language that provides a number of features and constructs to help developers write correct and efficient concurrent programs. Some of the key features of Rust that enable concurrent programming are:

Ownership and borrowing system: Rust's ownership and borrowing rules are checked at compile time, so data can be moved to or shared between threads without data races. This removes a whole class of bugs that are common in concurrent programs.

Rust's type system: Strong typing and memory safety guarantees extend to threads through the Send and Sync marker traits, which determine which types may be transferred to or shared between threads.

Channels: Rust's standard library provides channels (std::sync::mpsc), which let threads communicate safely by passing messages.

Async/await: Rust has first-class syntax for asynchronous programming through async/await. The standard library does not ship an executor, so async code runs on a runtime such as Tokio. This lets a program handle many I/O-bound tasks efficiently with code that reads sequentially.

Mutexes and RwLocks: Rust provides mutexes and read-write locks, synchronization primitives that guard shared mutable data. A Mutex admits one thread at a time, while an RwLock admits either many readers or a single writer.

By using these features and constructs, Rust makes it easy to write efficient and correct concurrent programs. This is one of the many reasons why Rust has become a popular language for building scalable and performant systems.
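As a rough illustration of how ownership and borrowing carry over to threads, the sketch below uses scoped threads from the standard library to let two threads borrow halves of the same slice. The function name sum_halves is hypothetical and chosen only for this example.

```rust
use std::thread;

/// Sums the two halves of a slice on two scoped threads.
/// The threads only borrow `data`; the scope guarantees they finish
/// before the borrow ends, so no `Arc` or cloning is needed.
fn sum_halves(data: &[i64]) -> i64 {
    let (left, right) = data.split_at(data.len() / 2);
    thread::scope(|s| {
        let l = s.spawn(|| left.iter().sum::<i64>());
        let r = s.spawn(|| right.iter().sum::<i64>());
        l.join().unwrap() + r.join().unwrap()
    })
}

fn main() {
    let data: Vec<i64> = (1..=100).collect();
    println!("total = {}", sum_halves(&data)); // prints "total = 5050"
}
```

If one of the closures tried to mutate data while the other read it, the borrow checker would reject the program at compile time.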

Concepts

Here are some important concepts of parallel computing and concurrency:

Parallelism: Parallelism is the ability to execute multiple tasks or processes simultaneously. It is a key concept in parallel computing and is used to improve system performance and throughput.

Granularity: Granularity refers to the size of the tasks assigned to processors in a parallel system. There are two types of granularity: fine-grained and coarse-grained. Fine-grained tasks are small and require little processing time, while coarse-grained tasks are larger and require more processing time.

Concurrency: Concurrency is the ability to make progress on multiple tasks or processes during overlapping time periods, not necessarily at the same instant. It is a key concept in systems with multiple threads or processes, where it is used to improve performance and responsiveness.

Synchronization: Synchronization is the coordination of multiple threads or processes to ensure they access shared resources in a safe and orderly manner. Synchronization is essential when sharing data between threads or processes and is usually achieved using locks or semaphores.

Deadlock: Deadlock is a situation where two or more threads or processes are blocked waiting for each other to release a resource, so none of them can ever make progress. Deadlock can occur when synchronization is not properly managed.

Race Condition: A race condition occurs when the result of a program depends on the relative timing of threads or processes, typically because they read and write the same memory location concurrently. Race conditions can be avoided by proper synchronization.

Load Balancing: Load balancing is the distribution of workload among multiple processors or cores in a parallel computing system. Load balancing is crucial to achieve high system performance and to ensure that no processor is idle while others are overloaded.

Parallel Algorithms: Parallel algorithms are algorithms designed to work efficiently in a parallel computing environment. They are optimized for executing multiple tasks concurrently and can achieve significant performance improvements over their serial counterparts.

Concurrency

Concurrency refers to the ability of a system to perform multiple tasks simultaneously or in overlapping time periods. A concurrent system can have multiple independent tasks running, and each task can progress independently from others.

Multi-threading

Multi-threading is the ability to run multiple threads of execution within a single process. Each thread runs independently and shares memory space and system resources with other threads of the same process. Multi-threading is an efficient way to harness the power of modern CPUs, which have multiple cores.

One major advantage of multi-threading is that threads can easily communicate with each other and share resources. The main disadvantage is the possibility of data races, where two or more threads access the same memory location without proper synchronization and at least one of them writes to it.
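A minimal sketch of multi-threading with the standard library might look like the following. Each input value is moved into its own thread, and the result comes back through the thread's JoinHandle. The helper name squares_in_threads is an assumption made for this illustration.

```rust
use std::thread;

/// Spawns one thread per input and collects each thread's return value.
/// Handles are joined in spawn order, so the output order matches the input.
fn squares_in_threads(inputs: Vec<u64>) -> Vec<u64> {
    let handles: Vec<thread::JoinHandle<u64>> = inputs
        .into_iter()
        .map(|n| thread::spawn(move || n * n)) // `move` transfers ownership of `n`
        .collect();
    handles.into_iter().map(|h| h.join().unwrap()).collect()
}

fn main() {
    println!("{:?}", squares_in_threads(vec![1, 2, 3, 4])); // prints "[1, 4, 9, 16]"
}
```

Spawning one operating-system thread per item is only reasonable for a handful of items; larger workloads usually call for a pool.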

Multi-processing

Multi-processing is the ability to run multiple independent processes on a system. Each process runs in its own memory space and has its own set of system resources. Multi-processing is a way to achieve full CPU utilization in a system with multiple CPUs or cores.

One major advantage of multi-processing is that it provides better isolation between processes, leading to better fault tolerance. However, inter-process communication can be challenging, and it often requires explicit coding for data sharing.

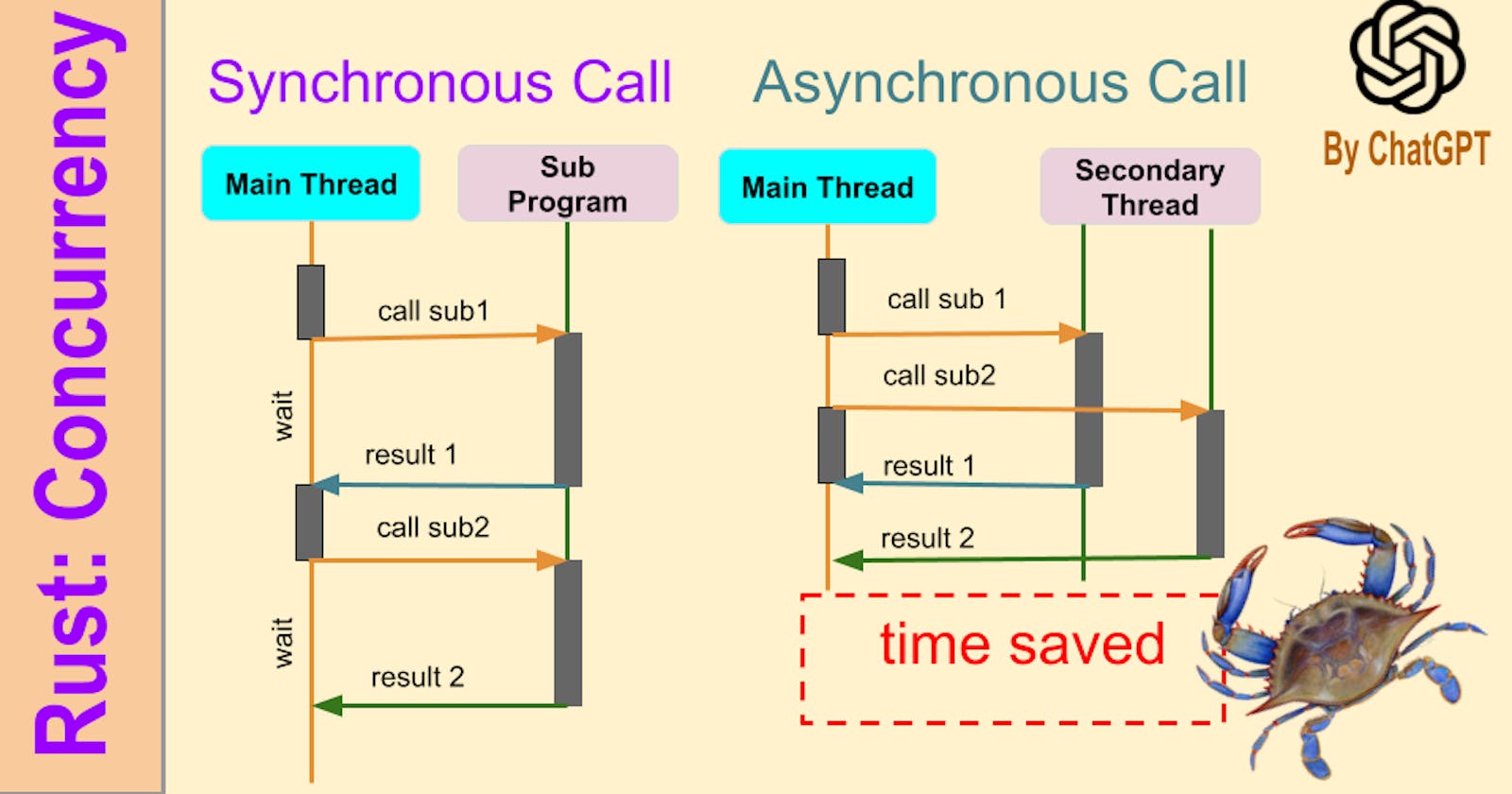

Asynchronous Execution

Asynchronous execution is the execution of code without waiting for the completion of a previous operation. In asynchronous programming, a task is started, and then the program moves on to perform other tasks while waiting for the previously started task to complete. Asynchronous programming can be used to improve performance and responsiveness of systems.

One major advantage of asynchronous programming is that it can reduce wait times and thus improve overall system throughput. The main disadvantage is that async programming can be more difficult to reason about than synchronous programming because of its non-linear flow of control.

Concurrency & Parallelism

Concurrency and parallelism are terms that are commonly used interchangeably, but they have slightly different meanings in the context of programming and algorithm design.

Concurrency

Concurrency refers to the ability of a program to make progress on multiple tasks during overlapping time periods, even if they are not physically running at the same instant. A concurrent program is broken down into smaller sub-tasks that can be interleaved, for example on a single core that switches between them.

Concurrency is most beneficial when tasks are I/O bound, such as when waiting for user input or accessing external resources. With I/O bound tasks, concurrency allows a program to continue executing other tasks without waiting for the I/O operation to complete, resulting in improved performance.

Parallelism

Parallelism, on the other hand, refers to the ability of a program to run multiple tasks simultaneously by physically running them on separate processing units, such as multiple cores of the CPU or multiple machines. In parallel computing, the program is designed to work on separate parts of input data in parallel, allowing multiple tasks to be executed simultaneously and enabling faster execution times.

Parallelism is generally the better fit when tasks are computationally intensive and require significant processing power. It maximizes the utilization of available resources by running tasks on multiple cores or machines, resulting in faster execution times.
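To make the CPU-bound case concrete, here is a small sketch that splits a range of numbers across threads, asking the standard library how many hardware threads are available. The functions is_prime and count_primes_parallel are hypothetical helpers written for this example, and trial division is used only because it is deliberately compute-heavy.

```rust
use std::thread;

/// Trial-division primality test (intentionally CPU-bound).
fn is_prime(n: u32) -> bool {
    if n < 2 {
        return false;
    }
    let mut d = 2;
    while d <= n / d {
        if n % d == 0 {
            return false;
        }
        d += 1;
    }
    true
}

/// Splits `0..limit` into contiguous ranges, one per worker thread,
/// counts the primes in each range in parallel, and adds up the counts.
fn count_primes_parallel(limit: u32, workers: u32) -> usize {
    let workers = workers.max(1);
    let chunk = limit / workers + 1; // workers * chunk always covers `limit`
    thread::scope(|s| {
        let handles: Vec<_> = (0..workers)
            .map(|w| {
                let start = w.saturating_mul(chunk).min(limit);
                let end = start.saturating_add(chunk).min(limit);
                s.spawn(move || (start..end).filter(|&n| is_prime(n)).count())
            })
            .collect();
        handles.into_iter().map(|h| h.join().unwrap()).sum::<usize>()
    })
}

fn main() {
    // Fall back to a single worker if the core count cannot be determined.
    let workers = thread::available_parallelism()
        .map(|n| n.get() as u32)
        .unwrap_or(1);
    let count = count_primes_parallel(100_000, workers);
    println!("{} primes below 100000 using {} threads", count, workers);
}
```

On a single-core machine the same code still runs correctly; the threads are then merely concurrent rather than parallel.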

Best Practices:

Use Rust's concurrency primitives: Rust has several built-in concurrency primitives, such as channels, mutexes, and atomic variables, that enable easy and efficient synchronization between threads. Using these primitives instead of rolling your own synchronization solutions can help avoid many common pitfalls.

Use a thread pool: The Rust standard library does not include a thread pool, but the widely used rayon crate provides one and can parallelize computation-intensive tasks across multiple CPU cores. Prefer such a crate to spawning a new thread for every small task.

Share data safely: Sharing data between threads can lead to issues like race conditions and data inconsistencies. Rust provides mechanisms such as mutexes and atomic variables to share data safely. It's important to keep shared data protected with the right mechanism to avoid mistakes.

Understand ownership and borrowing: In Rust, ownership and borrowing rules prevent data races, but they can also make concurrent code more challenging to write. It is important to follow Rust's ownership and borrowing rules to avoid bugs in the code.
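As one example of picking the right primitive, a shared counter does not need a mutex at all. The sketch below uses an atomic integer instead; the function name count_with_atomic is made up for this illustration.

```rust
use std::sync::atomic::{AtomicUsize, Ordering};
use std::sync::Arc;
use std::thread;

/// Increments one shared atomic counter from several threads and
/// returns the final value.
fn count_with_atomic(threads: usize, increments_per_thread: usize) -> usize {
    let counter = Arc::new(AtomicUsize::new(0));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                for _ in 0..increments_per_thread {
                    // Relaxed is enough here: only the count matters, and
                    // `join` below synchronizes before the final load.
                    counter.fetch_add(1, Ordering::Relaxed);
                }
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    counter.load(Ordering::Relaxed)
}

fn main() {
    println!("{}", count_with_atomic(4, 1_000)); // prints "4000"
}
```

Atomics suit single values such as counters and flags. When several fields must change together, a Mutex is usually the simpler and safer choice.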

Pitfalls to Avoid:

Deadlocks: A deadlock occurs when multiple threads are waiting for each other to release resources. Deadlocks can occur if a thread holds a lock that it never releases, or if two threads each hold one lock while waiting for the lock held by the other, which typically happens when locks are acquired in different orders. Deadlocks are difficult to debug, and the Rust compiler does not detect them, so it's essential to avoid them by design.

Data races: A data race occurs when two or more threads access the same shared data simultaneously without synchronization, with at least one thread writing to the data. Data races can lead to data inconsistencies, crashes, and undefined behavior. Safe Rust rejects data races at compile time; in unsafe code, or at the level of program logic, locks and other sync primitives are still needed to keep shared state consistent.

Blocking code: Blocking code can lead to poor parallelism and decreased performance. Long-running IO operations, such as reading from a file or waiting for network requests, can block threads and prevent other tasks from executing. It is essential to use asynchronous programming to avoid blocking code when possible.

Overuse of synchronization: Using excessive synchronization can also lead to poor performance. Synchronization can be expensive, so it's important to minimize its use to only the necessary code.
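One common way to sidestep the lock-ordering deadlock described above is to always acquire locks in a fixed global order. The following sketch applies that rule to two accounts; transfer and run_transfers are hypothetical names used only here.

```rust
use std::sync::{Arc, Mutex};
use std::thread;

/// Moves `amount` between two accounts, always locking the lower index
/// first so that two opposite transfers can never wait on each other.
fn transfer(accounts: &[Mutex<i64>], from: usize, to: usize, amount: i64) {
    if from == to {
        return;
    }
    let (first, second) = if from < to { (from, to) } else { (to, from) };
    let mut a = accounts[first].lock().unwrap();
    let mut b = accounts[second].lock().unwrap();
    // Map the ordered guards back to their source/destination roles.
    let (src, dst) = if from < to {
        (&mut *a, &mut *b)
    } else {
        (&mut *b, &mut *a)
    };
    *src -= amount;
    *dst += amount;
}

/// Runs transfers in both directions at once and returns the final balances.
fn run_transfers() -> (i64, i64) {
    let accounts = Arc::new(vec![Mutex::new(1_000_i64), Mutex::new(1_000_i64)]);
    let a = Arc::clone(&accounts);
    let t1 = thread::spawn(move || {
        for _ in 0..1_000 {
            transfer(&a, 0, 1, 1);
        }
    });
    let b = Arc::clone(&accounts);
    let t2 = thread::spawn(move || {
        for _ in 0..1_000 {
            transfer(&b, 1, 0, 2);
        }
    });
    t1.join().unwrap();
    t2.join().unwrap();
    let x = *accounts[0].lock().unwrap();
    let y = *accounts[1].lock().unwrap();
    (x, y)
}

fn main() {
    println!("{:?}", run_transfers()); // prints "(2000, 0)"
}
```

Had each thread locked its own source account first, the two threads could each hold one lock and wait forever for the other.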

Example using Mutex:

As an example, consider the following Rust code:

    use std::sync::{Arc, Mutex};
    use std::thread;

    fn main() {
        let counter = Arc::new(Mutex::new(0));
        let mut handles = vec![];

        for _ in 0..10 {
            let counter = Arc::clone(&counter);
            let handle = thread::spawn(move || {
                let mut num = counter.lock().unwrap();
                *num += 1;
            });
            handles.push(handle);
        }

        for handle in handles {
            handle.join().unwrap();
        }

        println!("Result: {}", *counter.lock().unwrap());
    }

Notes: This program shows how to use a mutex to synchronize access to a shared variable in a concurrent Rust program. The Arc type is used to share ownership of the mutex between all the threads. In each thread, the mutex is locked before the shared variable is incremented, ensuring that only one thread updates the variable at a time. The lock is released when the guard goes out of scope at the end of the closure, and the program prints Result: 10.

Design Patterns

In Rust, design patterns for parallel computing and concurrency are used to solve challenges and improve the scalability and performance of concurrent applications. Here are some popular design patterns for parallel computing and concurrency in Rust:

Thread pool: A thread pool is a common pattern for managing concurrent workloads. It involves creating a fixed set of threads up front and distributing work across them. This avoids the overhead of creating and destroying a thread per task, allowing for more efficient execution of parallel tasks.

Futures: Futures are a lightweight abstraction for representing asynchronous computations. In Rust, they provide a flexible and composable way to handle asynchronous events and help in building highly concurrent and scalable systems.

Actor Model: The actor model is a design pattern for building highly concurrent and fault-tolerant systems out of isolated actors that communicate by messages. In Rust, the actix framework provides an implementation of this pattern.

Map-Reduce: The map-reduce pattern is used for processing large amounts of data in parallel. In Rust, it can be implemented using libraries like Rayon or written from scratch using the standard library's concurrency primitives.

Lock-free data structures: These allow multiple threads to access shared data without taking locks, typically by relying on atomic operations. In Rust, the crossbeam library provides lock-free queues and related low-level building blocks.

Overall, Rust provides a variety of design patterns and tools that help developers write highly concurrent and efficient applications. These patterns help in designing clean and robust parallel computations, improving the resource utilization of the application while ensuring safety and scalability.
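To show what the thread pool pattern involves, here is a deliberately small pool built only from the standard library. It is a sketch under simplifying assumptions (no panic recovery, no work stealing), not a substitute for a production crate, and the ThreadPool type and sum_squares_on_pool helper are names invented for this example.

```rust
use std::sync::{mpsc, Arc, Mutex};
use std::thread;

type Job = Box<dyn FnOnce() + Send + 'static>;

/// A fixed-size pool: workers pull boxed closures from a shared channel.
struct ThreadPool {
    sender: Option<mpsc::Sender<Job>>,
    workers: Vec<thread::JoinHandle<()>>,
}

impl ThreadPool {
    fn new(size: usize) -> Self {
        let (sender, receiver) = mpsc::channel::<Job>();
        let receiver = Arc::new(Mutex::new(receiver));
        let workers: Vec<_> = (0..size.max(1))
            .map(|_| {
                let receiver = Arc::clone(&receiver);
                thread::spawn(move || loop {
                    // The lock is held only while taking the next job.
                    let job = receiver.lock().unwrap().recv();
                    match job {
                        Ok(job) => job(),
                        Err(_) => break, // channel closed: shut down
                    }
                })
            })
            .collect();
        ThreadPool { sender: Some(sender), workers }
    }

    fn execute<F: FnOnce() + Send + 'static>(&self, f: F) {
        self.sender.as_ref().unwrap().send(Box::new(f)).unwrap();
    }

    /// Closes the queue and waits for the workers to drain it.
    fn shutdown(mut self) {
        drop(self.sender.take());
        for worker in self.workers.drain(..) {
            worker.join().unwrap();
        }
    }
}

/// Computes 1^2 + 2^2 + ... + n^2 by submitting one job per term.
fn sum_squares_on_pool(n: u64, pool_size: usize) -> u64 {
    let pool = ThreadPool::new(pool_size);
    let (tx, rx) = mpsc::channel();
    for i in 1..=n {
        let tx = tx.clone();
        pool.execute(move || tx.send(i * i).unwrap());
    }
    drop(tx); // only the jobs hold senders now
    pool.shutdown();
    rx.iter().sum()
}

fn main() {
    println!("{}", sum_squares_on_pool(10, 3)); // prints "385"
}
```

Only three threads are ever created here, no matter how many jobs are submitted.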

Use-Cases

The Producer-Consumer pattern is useful for processing large datasets, the Map-Reduce pattern is useful for parallel data processing, the Futures pattern is useful for asynchronous I/O, and the Actor Model pattern is useful for creating large-scale concurrent systems.

Producer-Consumer

The Producer-Consumer concurrency pattern involves dividing work between two types of threads: producers and consumers. Producers generate data and send it to a shared buffer, while consumers read the data from the buffer and process it. This pattern is useful in situations where a large amount of data needs to be processed, such as processing log files or conducting data analysis on large datasets.

In Rust, the Producer-Consumer concurrency pattern can be implemented using channels. The producers use one end of the channel to send data to the consumers, while the consumers use the other end of the channel to receive data.
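A minimal sketch of this arrangement, assuming several producers and one consumer, could use a bounded channel so that producers slow down when the consumer falls behind. The function name produce_and_consume and the buffer size of 4 are arbitrary choices for the example.

```rust
use std::sync::mpsc;
use std::thread;

/// Several producers send distinct numbers through a bounded channel;
/// one consumer adds up everything it receives.
fn produce_and_consume(producers: u32, items_per_producer: u32) -> u32 {
    // Buffer of 4 slots: `send` blocks while the buffer is full.
    let (tx, rx) = mpsc::sync_channel::<u32>(4);

    let handles: Vec<_> = (0..producers)
        .map(|id| {
            let tx = tx.clone();
            thread::spawn(move || {
                for n in 0..items_per_producer {
                    tx.send(id * items_per_producer + n).unwrap();
                }
            })
        })
        .collect();
    // Drop the original sender so the channel closes once every
    // producer has finished and dropped its clone.
    drop(tx);

    // `rx.iter()` ends when the channel is closed and empty.
    let consumer = thread::spawn(move || rx.iter().sum::<u32>());

    for handle in handles {
        handle.join().unwrap();
    }
    consumer.join().unwrap()
}

fn main() {
    // Producers send 0..15 between them, so the total is 105.
    println!("{}", produce_and_consume(3, 5)); // prints "105"
}
```

The mpsc in the module name stands for multi-producer, single-consumer, which is why the sender can be cloned but the receiver cannot.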

Map-Reduce

The Map-Reduce concurrency pattern involves breaking down a large dataset into smaller chunks, processing each chunk in a parallel fashion, and then combining the results into a final result. This pattern is useful in situations where a large amount of data needs to be processed, such as data analysis or image processing.

In Rust, the Map-Reduce concurrency pattern can be implemented using the Rayon crate, which provides a high-level API for parallel data processing.
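The shape of the pattern can also be sketched without any external crate. The example below, which uses hypothetical helpers map_chunk, reduce, and word_length_histogram, maps each chunk of lines to a partial histogram of word lengths on its own scoped thread and then reduces the partial results into one.

```rust
use std::collections::BTreeMap;
use std::thread;

/// Map step: histogram of word lengths for one chunk of lines.
fn map_chunk(lines: &[&str]) -> BTreeMap<usize, usize> {
    let mut hist = BTreeMap::new();
    for word in lines.iter().flat_map(|line| line.split_whitespace()) {
        *hist.entry(word.len()).or_insert(0) += 1;
    }
    hist
}

/// Reduce step: merge two partial histograms into one.
fn reduce(mut a: BTreeMap<usize, usize>, b: BTreeMap<usize, usize>) -> BTreeMap<usize, usize> {
    for (len, count) in b {
        *a.entry(len).or_insert(0) += count;
    }
    a
}

/// Splits `lines` into chunks, maps each chunk on its own thread,
/// then folds the partial histograms together.
fn word_length_histogram(lines: &[&str], chunk_size: usize) -> BTreeMap<usize, usize> {
    thread::scope(|s| {
        let handles: Vec<_> = lines
            .chunks(chunk_size.max(1))
            .map(|chunk| s.spawn(move || map_chunk(chunk)))
            .collect();
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .fold(BTreeMap::new(), reduce)
    })
}

fn main() {
    let lines = ["a bb", "ccc a", "bb"];
    // Word lengths: 1, 2, 3, 1, 2
    println!("{:?}", word_length_histogram(&lines, 2)); // prints "{1: 2, 2: 2, 3: 1}"
}
```

Because the reduce step is associative, the partial results can be merged in any grouping, which is what makes the pattern easy to parallelize.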

Futures

As mentioned earlier, the Futures concurrency pattern is based on promises or futures. A future is a placeholder for a value that is not yet available, and it allows concurrent code to continue executing until the value is needed. This pattern is useful in situations where code needs to execute concurrently, but a result may not be immediately available, such as when waiting for a network request to complete or when reading from a file.

In Rust, the Future trait is part of the standard library, and the futures crate (developed in the futures-rs repository) provides combinators and utilities for writing asynchronous code with futures.
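To show what driving a future actually means, here is a tiny executor written with only the standard library. It is a teaching sketch, not how a real runtime is structured: block_on, ThreadWaker, double, and pipeline are all names chosen for this example.

```rust
use std::future::Future;
use std::pin::pin;
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};
use std::thread::{self, Thread};

/// Waker that unparks the thread blocked in `block_on`.
struct ThreadWaker(Thread);

impl Wake for ThreadWaker {
    fn wake(self: Arc<Self>) {
        self.0.unpark();
    }
}

/// Minimal single-future executor: poll the future, and park the
/// current thread whenever it reports that it is not ready yet.
fn block_on<F: Future>(fut: F) -> F::Output {
    let mut fut = pin!(fut);
    let waker = Waker::from(Arc::new(ThreadWaker(thread::current())));
    let mut cx = Context::from_waker(&waker);
    loop {
        match fut.as_mut().poll(&mut cx) {
            Poll::Ready(value) => return value,
            Poll::Pending => thread::park(),
        }
    }
}

async fn double(n: u32) -> u32 {
    n * 2
}

/// Futures compose: each `.await` is a point where the task may yield.
async fn pipeline(n: u32) -> u32 {
    let a = double(n).await;
    double(a).await + 1
}

fn main() {
    println!("{}", block_on(pipeline(10))); // prints "41"
}
```

Calling pipeline(10) by itself does nothing; the work happens only when an executor polls the returned future. Real runtimes add a task queue, timers, and I/O readiness notifications on top of this loop.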

Actor Model

The Actor Model concurrency pattern involves creating actors, which are independent units of computation that communicate through message passing. Each actor has its own private state and responds to messages that are sent to it, without sharing state with other actors. This pattern is useful in situations where large-scale concurrency is required, such as in web applications or distributed systems.

In Rust, the Actor Model concurrency pattern can be implemented using the Actix actor framework, which provides a high-level API for creating and managing actors.
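The core idea can be sketched without a framework: an actor is a thread that owns its state and a mailbox, and everyone else holds only the sending end. The Msg enum and the functions spawn_counter, ask_total, and run_counter_demo below are hypothetical and do not reflect the Actix API.

```rust
use std::sync::mpsc::{self, Sender};
use std::thread;

/// Messages the counter actor understands.
enum Msg {
    Add(i64),
    Get(Sender<i64>), // carries a channel for the reply
    Stop,
}

/// Spawns the actor and returns its mailbox address and join handle.
fn spawn_counter() -> (Sender<Msg>, thread::JoinHandle<()>) {
    let (tx, rx) = mpsc::channel();
    let handle = thread::spawn(move || {
        let mut total = 0_i64; // private state, never shared
        for msg in rx {
            match msg {
                Msg::Add(n) => total += n,
                Msg::Get(reply) => {
                    // Ignore the error if the asker has gone away.
                    let _ = reply.send(total);
                }
                Msg::Stop => break,
            }
        }
    });
    (tx, handle)
}

/// Request/response on top of one-way messages.
fn ask_total(actor: &Sender<Msg>) -> i64 {
    let (reply_tx, reply_rx) = mpsc::channel();
    actor.send(Msg::Get(reply_tx)).unwrap();
    reply_rx.recv().unwrap()
}

fn run_counter_demo() -> i64 {
    let (actor, handle) = spawn_counter();
    for n in 1..=5 {
        actor.send(Msg::Add(n)).unwrap();
    }
    let total = ask_total(&actor);
    actor.send(Msg::Stop).unwrap();
    handle.join().unwrap();
    total
}

fn main() {
    println!("{}", run_counter_demo()); // prints "15"
}
```

Since messages from one sender arrive in order, the Get request is handled only after the five Add messages, with no lock in sight.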

Parallel computing

There are several design patterns that can be used in Rust for parallel computing based on the specific requirements of the application. Here are a few patterns along with examples:

1. Data Parallelism Pattern

In this pattern, a large dataset is split into smaller chunks, which are processed in parallel by multiple threads. The results of each chunk are then combined to produce the final output. The standard library has no parallel iterator API, but the Rayon crate provides one (par_iter and related methods) that can be used to parallelize operations on collections. For example, to parallelize the filtering operation on a large vector of integers:

    use rayon::prelude::*;

    fn main() {
        let data = (0..10000).collect::<Vec<_>>();
        let filtered_data = data.par_iter().filter(|&x| x % 2 == 0).collect::<Vec<_>>();
        println!("{:?}", filtered_data);
    }

This code uses the Rayon crate to parallelize the filter operation on the data vector using the par_iter method. Rayon splits the vector into pieces that are processed by the threads of its pool, and the elements that pass the filter are collected into a new vector.

2. Producer-Consumer Pattern

In this pattern, tasks are split into two parts - a producer that generates tasks and a consumer that processes them in parallel. The producer places tasks into a shared queue, which the consumer reads from and processes the tasks. Rust provides the std::sync::mpsc module for message passing between threads, which can be used to implement this pattern. For example, to implement a simple task queue that generates random numbers using the producer-consumer pattern:

    use std::sync::mpsc::{channel, Receiver, Sender};
    use std::thread;
    use std::time::Duration;

    use rand::Rng; // external rand crate, 0.8-style API

    fn main() {
        let (tx, rx): (Sender<i32>, Receiver<i32>) = channel();

        // Producer: sends 10 random numbers in 1..=100, one every 100 ms.
        let producer_thread = thread::spawn(move || {
            let mut rng = rand::thread_rng();
            for i in 0..10 {
                tx.send(rng.gen_range(1..101)).unwrap();
                println!("Producer: Task {} generated", i + 1);
                thread::sleep(Duration::from_millis(100));
            }
        });

        // Consumer: receives each number and takes 500 ms to process it.
        let consumer_thread = thread::spawn(move || {
            for i in 0..10 {
                let task = rx.recv().unwrap();
                println!("Consumer: Task {} processed with value {}", i + 1, task);
                thread::sleep(Duration::from_millis(500));
            }
        });

        producer_thread.join().unwrap();
        consumer_thread.join().unwrap();
    }

This code uses the std::sync::mpsc module to implement a simple task queue in which the producer generates 10 random numbers and places them into the queue using the send method, and the consumer reads from the queue using the recv method and processes the tasks.

3. MapReduce Pattern

In this pattern, a large dataset is split into smaller chunks, which are processed in parallel using a map operation. The results of the map operation are then combined using a reduce operation to produce the final output. Rust's standard library has no dedicated map-reduce API, but Rayon's parallel iterators provide map and reduce methods that fit the pattern directly. For example, to implement a simple word count program using the map-reduce pattern:

    use rayon::prelude::*;
    use std::collections::HashMap;

    fn main() {
        let data = vec![
            "hello world",
            "hello rust",
            "hello programming",
            "world rust",
            "world programming",
            "programming rust",
            "rust programming",
            "rust world",
        ];

        let results: HashMap<String, usize> = data
            .par_iter()
            // Map: count the words of each line into its own table.
            .map(|line| {
                let mut counts = HashMap::new();
                for word in line.split_whitespace() {
                    *counts.entry(word.to_owned()).or_insert(0) += 1;
                }
                counts
            })
            // Reduce: merge the per-line tables pairwise.
            .reduce(HashMap::new, |mut a, b| {
                for (k, v) in b {
                    *a.entry(k).or_insert(0) += v;
                }
                a
            });

        println!("{:#?}", results);
    }

This code uses Rayon to parallelize the word-count operation using the map and reduce methods. In the map step, each line is split into words using the split_whitespace method and counted into its own HashMap. In the reduce step, the partial maps are merged pairwise until a single map of totals remains. Rayon distributes both steps across its thread pool.

Non-blocking

Non-blocking operations are those that return immediately, without waiting for a result to be computed. Non-blocking operations are useful when you want to avoid blocking the calling thread, so that other computations can continue while the non-blocking operation is in progress. Instead of waiting for a result, you can provide a callback or use another mechanism to be notified when the operation has completed.

By allowing IO operations to execute asynchronously, we can improve the responsiveness of our code and prevent threads from blocking while waiting for IO operations to complete.

Use-Case:

Consider an example of reading from a file. If you use a blocking operation to read from a file, your code will wait until the entire file has been read before continuing. But if you use a non-blocking operation, your code can continue to execute while the file is being read, and it can be notified when the read operation has completed. This can improve the responsiveness of your application, particularly in cases where file reads or network I/O can take a long time.
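The difference between blocking and non-blocking calls can be sketched with a standard-library channel, where recv blocks and try_recv returns immediately. In the example below a background thread stands in for the slow read; poll_until_ready and the 50 ms delay are assumptions made purely for illustration.

```rust
use std::sync::mpsc::{self, TryRecvError};
use std::thread;
use std::time::Duration;

/// Starts a slow "read" on a background thread, then polls for the
/// result without blocking, doing other work between polls.
/// Returns the data and how many polls found nothing ready yet.
fn poll_until_ready() -> (String, u32) {
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        thread::sleep(Duration::from_millis(50)); // stand-in for slow I/O
        tx.send(String::from("file contents")).unwrap();
    });

    let mut other_work = 0;
    loop {
        match rx.try_recv() {
            Ok(data) => return (data, other_work),
            Err(TryRecvError::Empty) => {
                // Nothing yet: the caller is free to do something else.
                other_work += 1;
                thread::sleep(Duration::from_millis(5));
            }
            Err(TryRecvError::Disconnected) => panic!("worker exited without sending"),
        }
    }
}

fn main() {
    let (data, polls) = poll_until_ready();
    println!("got {:?} after {} empty polls", data, polls);
}
```

Polling in a loop like this is wasteful in real programs. Async runtimes replace it with a notification from the operating system when the operation is ready.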

Implementation:

In Rust, non-blocking IO is typically implemented with futures driven by an async runtime such as Tokio. Here's a simplified example that reads a file asynchronously (it assumes the tokio crate with its fs and rt-multi-thread features enabled):

    use std::io;
    use tokio::runtime::Runtime;

    fn main() {
        // Create a Tokio runtime and drive the future to completion on it.
        let rt = Runtime::new().unwrap();
        if let Err(e) = rt.block_on(read_file()) {
            eprintln!("Error reading file: {:?}", e);
        }
    }

    async fn read_file() -> io::Result<()> {
        // tokio::fs::read returns a future; `.await` yields to the runtime
        // instead of blocking this task while the read is in flight.
        let data = tokio::fs::read("example.txt").await?;
        println!("Contents: {:?}", data);
        Ok(())
    }

In this example, we use Tokio to read a file without blocking the task that requested it. Here's a breakdown of what is happening:

We import std::io for the result type and Runtime from the Tokio library.

In the main function, we create a new Runtime instance and pass the future returned by read_file() to block_on, which runs it to completion.

The read_file function is declared async, so calling it returns a future and does no work until that future is polled by the runtime.

Next, we call the tokio::fs::read() function, which returns a future that resolves to the entire contents of the file as a vector of bytes. The .await suspends read_file until the data is ready, and the ? operator propagates any IO error to the caller.

Finally, if the read completes successfully, we print the contents of the file. If it encounters an error, main prints the error.

Note: block_on makes the main thread wait until the future finishes, so this small program gains nothing over a blocking read. The benefit appears when the runtime has many tasks: while one task is suspended at an .await, the runtime can run others on the same threads. Tokio performs file operations on a dedicated blocking thread pool behind the scenes, because most operating systems do not offer a general-purpose asynchronous file API.

Concurrency

Concurrency is the ability of a program to make progress on multiple tasks at once. Rust's standard library provides threads, channels, and locks, making it practical to write concurrent programs that take advantage of multiple CPU cores to execute tasks faster. The following simple examples illustrate these concepts:

1. Threads

One of the simplest ways to implement concurrency in Rust is by using threads. Threads are independent sequences of execution within a program. A Rust program can spawn multiple threads to execute code concurrently. When threads share mutable data, a Mutex can be used for synchronization.

    use std::sync::{Arc, Mutex};
    use std::thread;

    fn main() {
        let shared_data = Arc::new(Mutex::new(0)); // Shared data between threads
        let mut threads = vec![];

        for i in 0..10 {
            let data = Arc::clone(&shared_data);
            let thread = thread::spawn(move || {
                let mut val = data.lock().unwrap(); // Lock the mutex to access shared data
                *val += i;
            });
            threads.push(thread);
        }

        for thread in threads {
            thread.join().unwrap(); // Wait for all threads to finish
        }

        // Lock once more to read the final value rather than printing the Mutex itself
        println!("Shared data = {}", *shared_data.lock().unwrap());
    }

In this example, we create a Mutex to share an integer variable between 10 threads. Each thread adds its loop index i (0 through 9) to the shared integer, so the final value is 45. We use Arc to share the ownership of the Mutex between different threads. The lock() method of the Mutex is used to acquire a lock on the shared data so that only one thread can access the data at any given time.

2. Channels

Channels are another way to implement concurrency in Rust. Channels are used to transmit data between threads. A channel has two ends: a sender and a receiver. A Rust program can spawn multiple threads that communicate with each other through channels.

    use std::sync::mpsc::channel;
    use std::thread;
    use std::time::Duration;

    fn main() {
        let (sender, receiver) = channel::<i32>(); // Create a channel to transmit integers

        let thread1 = thread::spawn(move || {
            for i in 0..10 {
                sender.send(i).unwrap(); // Send integer through the channel
                thread::sleep(Duration::from_millis(100));
            }
        });

        let thread2 = thread::spawn(move || {
            for _ in 0..10 {
                let received = receiver.recv().unwrap(); // Receive integer from the channel
                println!("Received integer: {}", received);
            }
        });

        thread1.join().unwrap();
        thread2.join().unwrap();
    }

Notes: In this example, we create a channel of type i32. We then spawn two threads: one thread sends integers through the channel, and the other thread receives integers from the channel. The send() method of the channel is used to transmit data, and the recv() method is used to receive data from the channel. The threads communicate with each other through the channel, allowing multiple tasks to be executed concurrently.

These are just a few examples of how you can implement concurrency concepts in Rust using threads and channels. The standard library also provides other concurrency constructs such as barriers, condition variables, and atomics that you can use to implement more complex concurrent programs. Semaphores are not part of the standard library, but async runtimes such as Tokio offer one.
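As a brief sketch of one of these constructs, the example below uses a Barrier so that no thread starts its second phase until every thread has finished the first. The function name run_phases and the shared log are hypothetical details of this illustration.

```rust
use std::sync::{Arc, Barrier, Mutex};
use std::thread;

/// Each worker records "phase 1", waits at the barrier, then records
/// "phase 2". Returns the shared log in the order entries were written.
fn run_phases(workers: usize) -> Vec<&'static str> {
    let barrier = Arc::new(Barrier::new(workers));
    let log = Arc::new(Mutex::new(Vec::new()));

    let handles: Vec<_> = (0..workers)
        .map(|_| {
            let barrier = Arc::clone(&barrier);
            let log = Arc::clone(&log);
            thread::spawn(move || {
                log.lock().unwrap().push("phase 1");
                // Blocks until all `workers` threads have reached this point.
                barrier.wait();
                log.lock().unwrap().push("phase 2");
            })
        })
        .collect();

    for handle in handles {
        handle.join().unwrap();
    }
    let result = log.lock().unwrap().clone();
    result
}

fn main() {
    // Every "phase 1" entry appears before any "phase 2" entry.
    println!("{:?}", run_phases(3));
}
```

The order of threads within a phase is not fixed, but the barrier guarantees the separation between phases.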

Conclusion

Rust offers developers multiple ways to achieve better performance and efficiency by providing support for concurrency, asynchronous execution, and parallelism. However, these techniques also add complexity to the codebase, and managing this complexity can be a challenge. Rust's ownership rules and well-defined abstractions make it easier to manage this complexity.

In Rust, concurrency is achieved through the use of threads and synchronization primitives, such as mutexes or channels. Rust's ownership rules rule out data races at compile time, although deadlocks and other logic errors remain the programmer's responsibility.

Asynchronous execution in Rust is achieved through the use of Futures, which are a lightweight abstraction for representing async computations. Futures can be composed and chained with combinators, and Rust's async/await syntax provides a higher-level way to write asynchronous code that is easier to reason about and maintain. An async runtime such as Tokio is needed to execute them.

Parallelism in Rust can be achieved through the use of Rayon, a high-level parallelism library that provides convenient abstractions for parallelizing operations over collections. Rust's multi-threaded model allows Rayon to take advantage of multiple CPU cores effectively, resulting in significantly better performance.

With careful consideration of the trade-offs, developers can take advantage of Rust's concurrency and asynchronous features to improve the performance and efficiency of their software applications.

Disclaimer: The information and content provided about parallel computing and concurrency in Rust are generated using AI. While I strive to provide accurate and relevant information, parallel computing and concurrency are complex and error-prone topics, so take care when implementing parallel solutions.

Good job, now you know parallel computing in Rust. 🍀